Convolutional Neural Networks, commonly known as CNNs, are one of the most transformative technologies in the world of artificial intelligence. They enable machines to interpret visual data with remarkable accuracy, powering applications such as facial recognition, self-driving cars, medical imaging, and security systems.

If you have ever searched Google Photos for your pet or a person's face without manually tagging any images, you have likely benefited from the kind of image recognition that CNNs made possible. These deep learning systems analyze and categorize images by learning to detect patterns and structures, an approach loosely inspired by how the human visual system works.

What Is a CNN in Deep Learning?

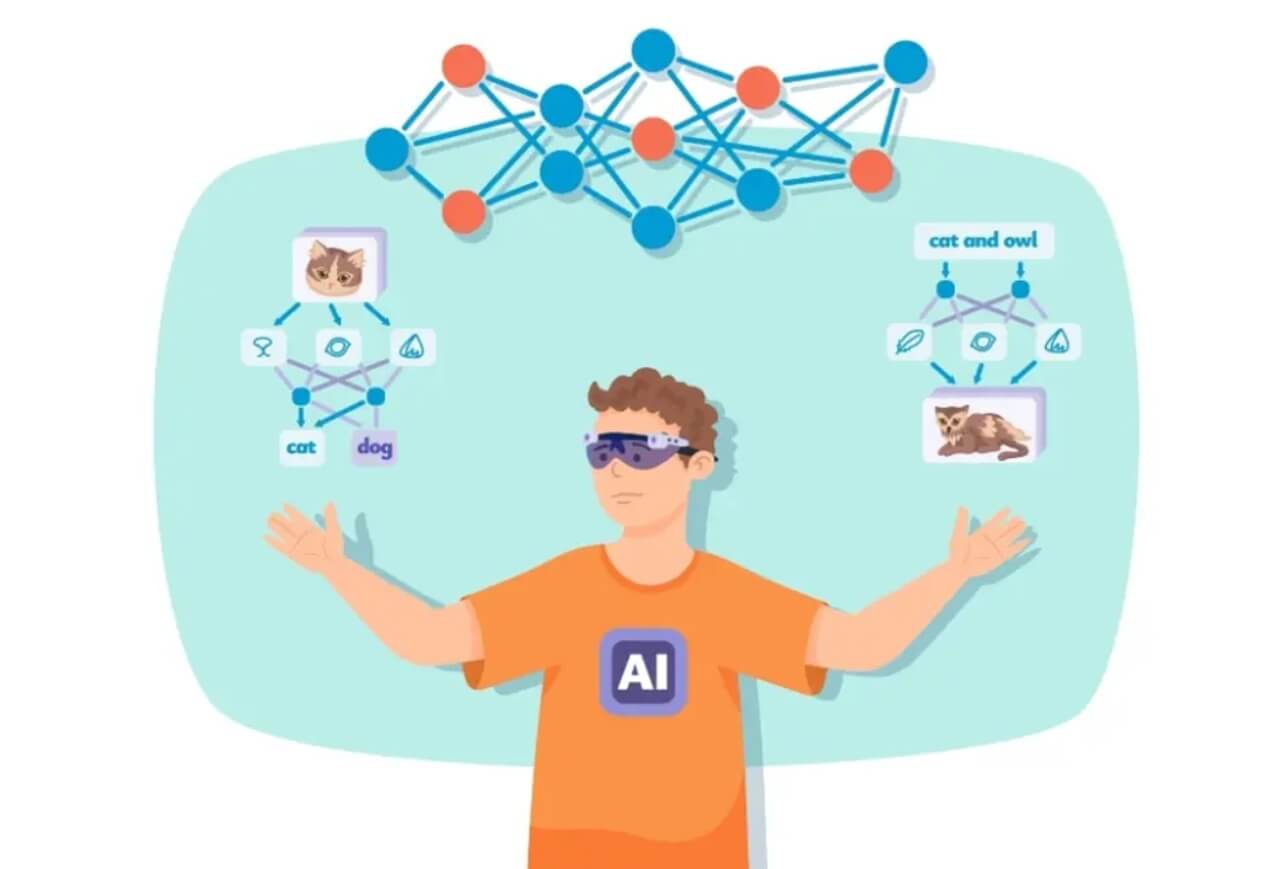

A Convolutional Neural Network (CNN) is a specialized neural network architecture designed to process grid-like data, particularly images. A traditional fully connected network links every input pixel to every neuron in the next layer, which requires an enormous number of parameters and a great deal of computation. A CNN instead looks at small groups of neighboring pixels and reuses the same filter weights across the whole image, which makes it far more efficient and well suited to recognizing visual patterns.
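To make the efficiency argument concrete, the following sketch counts the parameters of two hypothetical layers applied to a 224×224 RGB image: a fully connected layer with 1,000 neurons and a convolutional layer with 64 filters of size 3×3. These sizes are illustrative assumptions, not values taken from any particular model.

```python
# Hypothetical layer sizes, chosen only for illustration.
HEIGHT, WIDTH, CHANNELS = 224, 224, 3   # an RGB input image
HIDDEN_UNITS = 1000                     # neurons in a fully connected layer
NUM_FILTERS, KERNEL = 64, 3             # a convolutional layer with 64 filters of 3x3


def dense_params(n_inputs: int, n_outputs: int) -> int:
    """Weights plus biases for a fully connected layer: every input connects to every output."""
    return n_inputs * n_outputs + n_outputs


def conv_params(in_channels: int, num_filters: int, kernel: int) -> int:
    """Weights plus biases for a conv layer.

    Each filter spans kernel x kernel x in_channels values, and the same
    weights are reused at every image position, so the count does not
    depend on the image's height or width.
    """
    return num_filters * (kernel * kernel * in_channels) + num_filters


dense = dense_params(HEIGHT * WIDTH * CHANNELS, HIDDEN_UNITS)
conv = conv_params(CHANNELS, NUM_FILTERS, KERNEL)
print(f"fully connected: {dense:,} parameters")  # fully connected: 150,529,000 parameters
print(f"convolutional:   {conv:,} parameters")   # convolutional:   1,792 parameters
```

The two layers produce different kinds of output (1,000 values versus 64 feature maps), so this illustrates the difference in scale rather than a like-for-like comparison. The key point is that weight sharing keeps the convolutional layer's parameter count independent of image size.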

Rather than treating pixels as unrelated inputs, CNNs learn to recognize features such as edges, curves, shapes, and textures. Early layers respond to simple features like edges, and deeper layers combine them into increasingly complex patterns until the network can recognize whole objects such as faces, animals, or traffic signs. This layered approach is loosely inspired by the way the human visual system processes images.
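One way to see why deeper layers capture larger structures is to track each layer's receptive field: the patch of input pixels that can influence a single unit. The sketch below assumes a hypothetical stack of 3×3 convolutions (stride 1) alternating with 2×2 pooling layers (stride 2) and applies the standard receptive-field recurrence; the layer names and sizes are illustrative only.

```python
from typing import List, Tuple


def receptive_fields(layers: List[Tuple[str, int, int]]) -> List[Tuple[str, int]]:
    """Return the receptive field (input pixels per side) after each layer.

    Each layer is given as (name, kernel_size, stride). The recurrence is:
        r_out    = r_in + (kernel_size - 1) * jump_in
        jump_out = jump_in * stride
    where `jump` is the spacing, in input pixels, between adjacent units
    of the current feature map.
    """
    r, jump = 1, 1  # before any layer, a unit "sees" a single pixel
    result = []
    for name, kernel, stride in layers:
        r = r + (kernel - 1) * jump
        jump = jump * stride
        result.append((name, r))
    return result


# Hypothetical stack: conv (3x3, stride 1) and max pooling (2x2, stride 2).
stack = [("conv1", 3, 1), ("pool1", 2, 2), ("conv2", 3, 1), ("pool2", 2, 2), ("conv3", 3, 1)]
for name, r in receptive_fields(stack):
    print(f"{name}: each unit sees a {r}x{r} patch of the input")
```

Under these assumptions the receptive field grows from 3×3 after the first layer to 18×18 after the fifth, which is why later layers can respond to whole parts of an object rather than to single edges.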

How Do CNNs Work?

A typical CNN stacks three main kinds of layers, each with a distinct role:

1. Convolutional Layer

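This layer slides small filters (grids of learnable weights, often 3×3) across the image to detect patterns such as edges and basic shapes. At each position, it multiplies the filter's weights by the pixel values beneath it and sums the results. The resulting feature map has high values wherever the filter's pattern appears.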
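The sliding-filter operation can be sketched in plain Python as follows. This is a minimal illustration assuming stride 1 and no padding; the 5×5 image and the hand-picked vertical-edge filter are hypothetical, whereas a trained CNN learns its filter weights from data.

```python
from typing import List

Matrix = List[List[float]]


def convolve2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide `kernel` over `image` (stride 1, no padding) and return the feature map.

    Like most deep learning libraries, this computes cross-correlation:
    the kernel is not flipped before taking the dot product.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Dot product between the kernel and the image patch beneath it.
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map


# Hypothetical 5x5 image: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-picked vertical-edge filter; a trained CNN would learn such weights.
vertical_edge = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]
for row in convolve2d(image, vertical_edge):
    print(row)
```

Each output row is `[3.0, 3.0, 0.0]`: the filter responds strongly where its window straddles the dark-to-bright boundary and outputs 0 over the uniformly bright region.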

2. Pooling Layer

This layer shrinks the spatial size of the feature maps while keeping the strongest responses. Max pooling, for example, keeps only the largest value in each small window. Pooling lowers the computational cost of later layers and helps reduce overfitting.
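Max pooling, one common pooling choice (average pooling is another), can be sketched as follows. The sketch assumes non-overlapping 2×2 windows, and the 4×4 feature map is a made-up example.

```python
from typing import List

Matrix = List[List[float]]


def max_pool2d(feature_map: Matrix, size: int = 2) -> Matrix:
    """Non-overlapping max pooling: keep the largest value in each size x size window.

    Trailing rows or columns that do not fill a complete window are dropped.
    """
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    pooled = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            window = [feature_map[i * size + di][j * size + dj]
                      for di in range(size) for dj in range(size)]
            row.append(max(window))
        pooled.append(row)
    return pooled


# Made-up 4x4 feature map.
fm = [[1, 3, 2, 0],
      [4, 2, 1, 1],
      [0, 1, 5, 6],
      [2, 2, 7, 8]]
print(max_pool2d(fm))  # [[4, 2], [2, 8]]
```

The 4×4 map becomes 2×2, so the next layer has a quarter as many positions to process, while the strongest response in each region is preserved.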

3. Fully Connected Layer

The final layers combine all the extracted features and classify the image. For example, a trained CNN might conclude: “This image contains a dog.”
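A fully connected layer followed by a softmax, a common way to turn features into class probabilities, might look like the sketch below. The class labels, feature values, weights, and biases are all hypothetical; in a real CNN the features come from the convolution and pooling layers and the weights are learned during training.

```python
import math
from typing import List


def dense(features: List[float], weights: List[List[float]], biases: List[float]) -> List[float]:
    """Fully connected layer: one weighted sum over *all* features per output class."""
    return [sum(w * x for w, x in zip(row, features)) + b
            for row, b in zip(weights, biases)]


def softmax(logits: List[float]) -> List[float]:
    """Convert raw scores to probabilities summing to 1 (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


CLASSES = ["cat", "dog", "car"]      # hypothetical labels
features = [0.9, 0.1, 0.8, 0.3]      # hypothetical flattened output of earlier layers
weights = [[0.2, 0.5, -0.1, 0.0],    # made-up weights; a real network learns these
           [1.0, -0.3, 0.8, 0.2],
           [-0.5, 0.9, -0.4, 0.1]]
biases = [0.0, 0.1, -0.2]

probs = softmax(dense(features, weights, biases))
prediction = CLASSES[probs.index(max(probs))]
print(dict(zip(CLASSES, (round(p, 3) for p in probs))))
print(f"This image contains a {prediction}.")
```

With these made-up numbers the "dog" score is highest, so the sketch prints "This image contains a dog."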

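During training, the model improves through backpropagation. It compares its predictions with the correct labels, measures the error with a loss function, and adjusts its internal parameters (including the convolutional filters) to reduce that error.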
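The training loop described above can be illustrated on just the final classification layer. This is a minimal sketch assuming a softmax output, cross-entropy loss, and plain gradient descent with a hypothetical learning rate of 0.5. In a full CNN, the same gradients would continue flowing backward through the pooling and convolutional layers; that part is omitted here for brevity.

```python
import math
from typing import List


def forward(x: List[float], W: List[List[float]], b: List[float]) -> List[float]:
    """Fully connected layer followed by softmax; returns class probabilities."""
    logits = [sum(w * xi for w, xi in zip(row, x)) + bk for row, bk in zip(W, b)]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def train_step(x: List[float], label: int, W: List[List[float]], b: List[float],
               lr: float = 0.5) -> float:
    """One gradient-descent step on cross-entropy loss; returns the loss before the update."""
    probs = forward(x, W, b)
    loss = -math.log(probs[label])
    # For softmax + cross-entropy, dLoss/dlogit_k = p_k - y_k (y is the one-hot label).
    for k in range(len(W)):
        grad_logit = probs[k] - (1.0 if k == label else 0.0)
        for i in range(len(x)):
            W[k][i] -= lr * grad_logit * x[i]  # dLoss/dW[k][i] = grad_logit * x[i]
        b[k] -= lr * grad_logit                # dLoss/db[k]    = grad_logit
    return loss


x = [0.9, 0.1, 0.8, 0.3]          # hypothetical features for one training image
label = 1                          # hypothetical correct class index
W = [[0.0] * 4 for _ in range(3)]  # zero initialization for a reproducible demo
b = [0.0] * 3
losses = [train_step(x, label, W, b) for _ in range(5)]
print([round(loss, 4) for loss in losses])
```

Starting from uniform predictions (a loss of ln 3), each step lowers the loss on this example, which is the "compare, then adjust" cycle in miniature.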

Who Invented CNNs?

The history of CNNs dates back to 1980, when Japanese scientist Kunihiko Fukushima introduced the Neocognitron, one of the earliest models capable of visual pattern recognition. Modern CNNs, however, trace back to 1989, when Yann LeCun and his colleagues at Bell Labs trained a convolutional network with backpropagation to recognize handwritten digits in ZIP codes.

The breakthrough came in 2012, when researchers Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton built AlexNet, a CNN that won the ImageNet Large Scale Visual Recognition Challenge with a top-5 error rate of about 15.3%, far ahead of the runner-up's roughly 26.2%. This result is widely credited with launching the modern deep learning revolution.

Training and Real-World Applications

CNNs typically need large labeled datasets to learn effectively. By training on millions of labeled images, they learn to classify new, unseen images with high accuracy. Today, CNNs are used in:

Medical diagnostics (detecting tumors in MRI scans)

Autonomous vehicles (recognizing pedestrians and traffic signs)

Face and object recognition

Robotics and surveillance

Retail and e-commerce visual search

The Future of CNNs

While CNNs remain foundational in computer vision, newer architectures such as Vision Transformers (ViTs) are gaining momentum. When trained on very large datasets, ViTs can match or outperform CNNs on many tasks, but they generally demand more data and computing power. As a result, CNNs remain widely used on resource-constrained edge devices such as smartphones and IoT systems.

Despite the rise of newer architectures, CNNs remain a cornerstone of AI, enabling machines to see and interpret the world with remarkable accuracy.